🟢 Have You Tried the Fastest Large Language Model Experience in History? It's Currently Free!

Groq is a new large-model experience that runs open-source models such as Mixtral 8x7B-32k. Its highlight is an extremely fast response speed of about 500 tokens/s, achieved with the company's newly developed LPU (Language Processing Unit).

At the beginning of 2024, AI keeps making headlines! Google released its new-generation model, Gemini 1.5 Pro, followed by OpenAI's Sora, which drew global attention. Meanwhile Groq, which claims to offer the world's fastest LLM inference, also emerged! Its website is straightforward: no registration or payment required, just a prompt input box. Press Enter and the response appears almost instantly, a sensation everyone gets from Groq! The experience link is at the end. Let's explore Groq and its 500 tokens/s!

Super Fast Response Experience

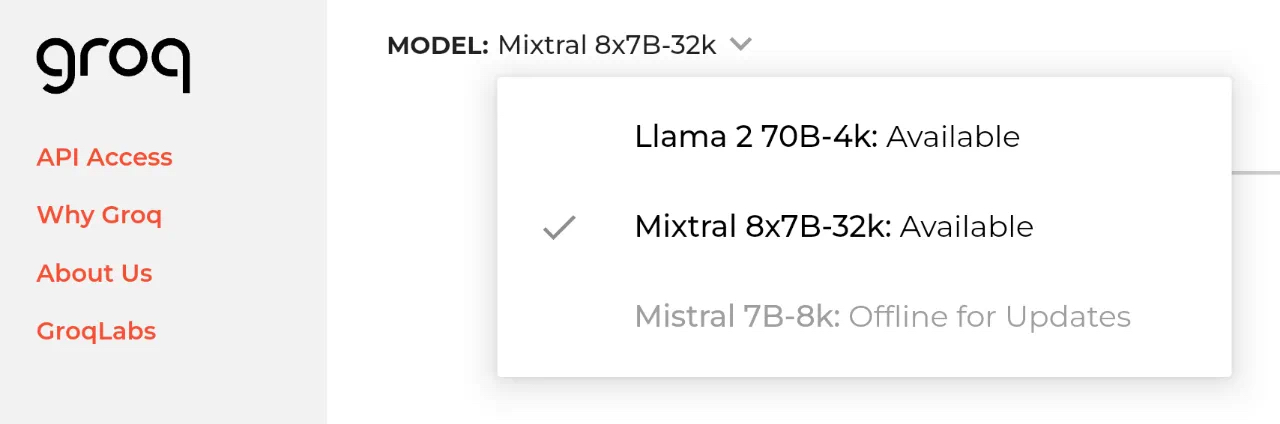

Groq's official website is very simple: no registration, no payment, just a prompt input box and a model selector.

In the model options, we can see two choices: Llama 2 70B-4K and Mixtral 8x7B-32K. You can choose either to start your experience!

Below is a comparison of response speeds between Groq and OpenAI, in which Groq is significantly faster than OpenAI!

Groq advertises a speed of 500 tokens/s! That is approximately 25 times the speed of ChatGPT (GPT-4 runs at around 20 tokens per second) and about 10 times that of Gemini 1.5 (about 50 tokens per second)!
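To make those ratios concrete, here is a small sketch that recomputes them from the throughput figures quoted above. The 1,000-token article length is a made-up number for illustration, and the function names are hypothetical.

```python
# Throughput figures quoted in this article, in tokens per second.
GROQ_TPS = 500
GPT4_TPS = 20
GEMINI_15_TPS = 50

# Hypothetical article length, chosen only for illustration.
ARTICLE_TOKENS = 1000


def speedup(fast_tps: float, slow_tps: float) -> float:
    """How many times faster one service generates tokens than another."""
    return fast_tps / slow_tps


def seconds_to_generate(num_tokens: int, tps: float) -> float:
    """Time needed to stream num_tokens at a steady throughput."""
    return num_tokens / tps


if __name__ == "__main__":
    print(f"Groq vs GPT-4: {speedup(GROQ_TPS, GPT4_TPS):.0f}x")
    print(f"Groq vs Gemini 1.5: {speedup(GROQ_TPS, GEMINI_15_TPS):.0f}x")
    for name, tps in [("Groq", GROQ_TPS), ("GPT-4", GPT4_TPS), ("Gemini 1.5", GEMINI_15_TPS)]:
        secs = seconds_to_generate(ARTICLE_TOKENS, tps)
        print(f"{name}: {secs:.0f} s for {ARTICLE_TOKENS} tokens")
```

At these rates, the same article that takes close to a minute on GPT-4 would finish in a couple of seconds on Groq, which is why the difference feels so dramatic in practice.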

Groq is not shy about it, directly challenging Meta and OpenAI! (You may wonder why not Google? Keep reading for a hint.)

I also tried it firsthand! Groq's speed is well worth experiencing: press Enter and a full article appears almost instantly. In fact, my test showed speeds even faster than the official 500 tokens/s!
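If you want to check a tokens-per-second figure yourself, a rough approach is to count streamed tokens and divide by the elapsed time. The sketch below assumes you already have some iterable that yields one token at a time; `fake_stream` is a hypothetical stand-in, not Groq's API, and the measurement includes the wait for the first token.

```python
import time
from typing import Callable, Iterable, Iterator


def measure_tokens_per_second(
    stream: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> float:
    """Consume a token stream and return tokens divided by elapsed seconds."""
    start = clock()
    count = 0
    for _ in stream:
        count += 1
    elapsed = clock() - start
    if elapsed <= 0:
        raise ValueError("elapsed time must be positive")
    return count / elapsed


def fake_stream(num_tokens: int, delay_seconds: float) -> Iterator[str]:
    """Hypothetical stand-in for a model's streaming output."""
    for i in range(num_tokens):
        time.sleep(delay_seconds)  # simulate per-token latency
        yield f"tok{i}"


if __name__ == "__main__":
    tps = measure_tokens_per_second(fake_stream(50, 0.001))
    print(f"measured throughput: {tps:.0f} tokens/s")
```

The `clock` parameter exists only so the timing source can be swapped out, for example in a test; real measurements will also vary with network latency and prompt length.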

How Effective is Groq?

Groq serves the Llama 2 70B-4K and Mixtral 8x7B-32K models. Here's a comparison with GPT-3.5:

From the table, we can see that Mixtral 8x7B beats GPT-3.5 on 4 of the 7 benchmarks, demonstrating its strong performance!

Groq also offers API pricing cheaper than OpenAI:

Why is Groq So Fast?

Groq's speed does not come from GPUs but from its self-developed LPU, priced at $20,000 each! (An astronomical price!)

Here's an excerpt from Groq's social media introduction to the LPU, which gives a basic understanding. Those in the field can also read the company's technical report for more insight!

Our chip focuses on matrix and vector operations, but GPUs excel at these too. A huge issue in token generation is the time each token takes to pass through the network, which becomes a bottleneck. To speed this up, you need faster calculations, and once the obvious options (faster accelerators, higher voltage, etc.) are exhausted, that is a challenge. GPUs often wait for the correct data to arrive before processing can continue, a problem made worse by multiple threads and complex prediction logic. This method works but wastes energy. With Groq's deterministic chip and system, we know where the data is at any point, so it is sent to the right place on time. This keeps utilization of the compute logic high, eliminates prediction logic, and wastes less energy. You also know exactly how long each run takes. For more info, check out this Reddit comment.

Can Groq Replace Current GPUs for Faster Training?

Groq's engineers answer candidly:

It may speed up training, but because of CUDA, Nvidia is likely still the best choice for training. Groq excels at large-scale inference.

World's Fastest Inference

On Anyscale's LLMPerf leaderboard, Meta AI's Llama 2 70B running on the Groq LPU™ inference engine delivers 18 times the output token throughput of the other top cloud-based providers. (A truly leading speed!)

Their tech team also comes from Google's TPU team (which explains the earlier hint: they are one family!)

Conclusion

Groq's emergence fires the first shot of this year's computing power revolution. Google's new Gemini 1.5 model shows extended context capabilities (which we will introduce in the next article), and OpenAI's Sora demonstrates the team's exploration on the path to AGI!

AI promises more surprises in 2024! Stay tuned!

Enjoy! Groq experience link: https://groq.com/