🟢 Knowledge Prompting

Whether incorporating external knowledge can improve commonsense reasoning remains an open question. Several studies have shown that integrating external knowledge can improve model performance on commonsense reasoning tasks. Knowledge Prompting requires neither task-specific supervision for knowledge integration nor access to a structured knowledge base. Instead, it generates knowledge directly from a language model and then feeds that knowledge in as additional input when answering questions.

As the authors put it, "We propose a simple yet effective method to obtain knowledge statements (i.e., knowledge expressed in natural language) from general language models in a few-shot scenario."
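The few-shot knowledge-generation step mostly comes down to assembling a prompt from a handful of demonstrations. The sketch below is a minimal illustration under stated assumptions: the `Demo` class, the `build_knowledge_prompt` helper, the instruction wording, the "Input:/Knowledge:" layout and the demonstration itself are all hypothetical, and sending the prompt to an actual language model is left out.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Demo:
    """A hypothetical demonstration: an input paired with a helpful knowledge statement."""
    question: str
    knowledge: str


def build_knowledge_prompt(instruction: str, demos: list[Demo], question: str) -> str:
    """Assemble a few-shot prompt that asks a model to continue with a knowledge statement."""
    parts = [instruction, ""]
    for demo in demos:
        # Each demonstration shows the model the expected input/knowledge pairing.
        parts += [f"Input: {demo.question}", f"Knowledge: {demo.knowledge}", ""]
    # The new question goes last; the model should continue after "Knowledge:".
    parts += [f"Input: {question}", "Knowledge:"]
    return "\n".join(parts)


demos = [
    Demo("Glass is a good conductor of electricity.",
         "Glass is an electrical insulator, so it conducts electricity very poorly."),
]
prompt = build_knowledge_prompt(
    "Generate some knowledge about the input.", demos, "Penguins have wings.")
print(prompt)
```

Ending the prompt with a bare "Knowledge:" line invites the model to complete it with a statement in the same style as the demonstrations.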

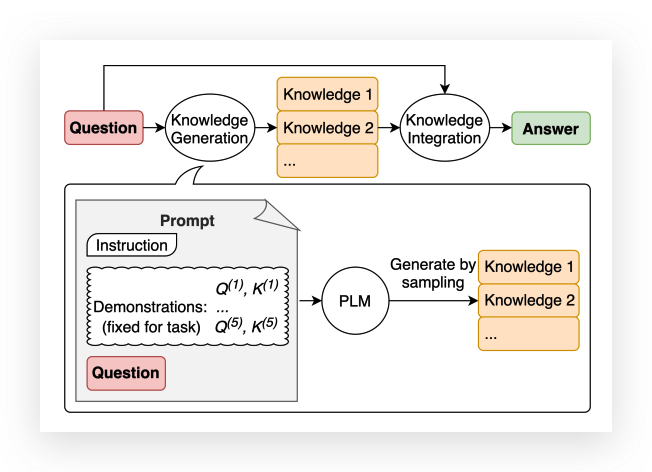

Knowledge Prompting mainly consists of two stages:

- Generating knowledge statements related to the question from the language model using a small number of demonstrations.

- Using a second language model (the inference model) to make one prediction per knowledge statement, then selecting the prediction with the highest confidence.
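The two stages above can be sketched in Python. This is a minimal sketch, not the authors' implementation: `generate_knowledge` and `answer_probs` are hypothetical stand-ins for calls to a generator model and an inference model, and the toy stubs at the bottom replace real models entirely. Scoring the bare question alongside the knowledge-augmented prompts is a choice made in this sketch, so that generated knowledge is used only when it raises the model's confidence.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical model interfaces (stand-ins for real language-model calls):
#   generate_knowledge(question, n) -> up to n knowledge statements
#   answer_probs(prompt, choices)   -> one probability per answer choice
GenerateFn = Callable[[str, int], list[str]]
ScoreFn = Callable[[str, list[str]], list[float]]


def knowledge_prompting(
    question: str,
    choices: list[str],
    generate_knowledge: GenerateFn,
    answer_probs: ScoreFn,
    n_knowledge: int = 3,
) -> tuple[str, str | None, float]:
    """Return (answer, knowledge statement used or None, confidence)."""
    # Stage 1: ask the generator model for several knowledge statements.
    statements = generate_knowledge(question, n_knowledge)

    # Stage 2: one prediction per candidate prompt; None means the bare question.
    best_answer, best_knowledge, best_conf = "", None, -1.0
    for knowledge in [None, *statements]:
        prompt = question if knowledge is None else f"{knowledge} {question}"
        probs = answer_probs(prompt, choices)
        top = max(range(len(choices)), key=lambda i: probs[i])
        # Keep the prediction the inference model is most confident about.
        if probs[top] > best_conf:
            best_answer, best_knowledge, best_conf = choices[top], knowledge, probs[top]
    return best_answer, best_knowledge, best_conf


# Toy stand-ins so the sketch runs without any model (hypothetical behavior).
def fake_generate(question: str, n: int) -> list[str]:
    return ["Penguins are birds whose wings evolved into flippers for swimming."][:n]


def fake_answer_probs(prompt: str, choices: list[str]) -> list[float]:
    # Pretend the model answers "yes" confidently only once the flipper fact is present.
    return [0.9, 0.1] if "flippers" in prompt else [0.4, 0.6]


print(knowledge_prompting("Do penguins have wings?", ["yes", "no"],
                          fake_generate, fake_answer_probs))
```

Taking the single most confident prediction, rather than voting across statements, follows the selection rule described in the second stage.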

Knowledge Generation

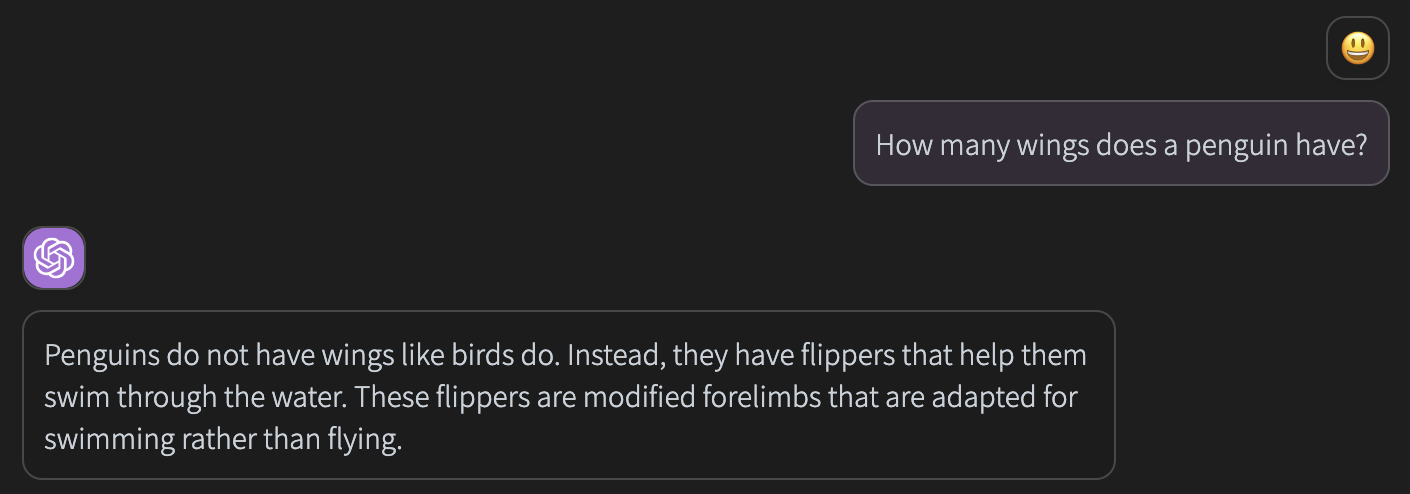

Here we ask ChatGPT a commonsense question:

ChatGPT answers that penguins do not have wings.

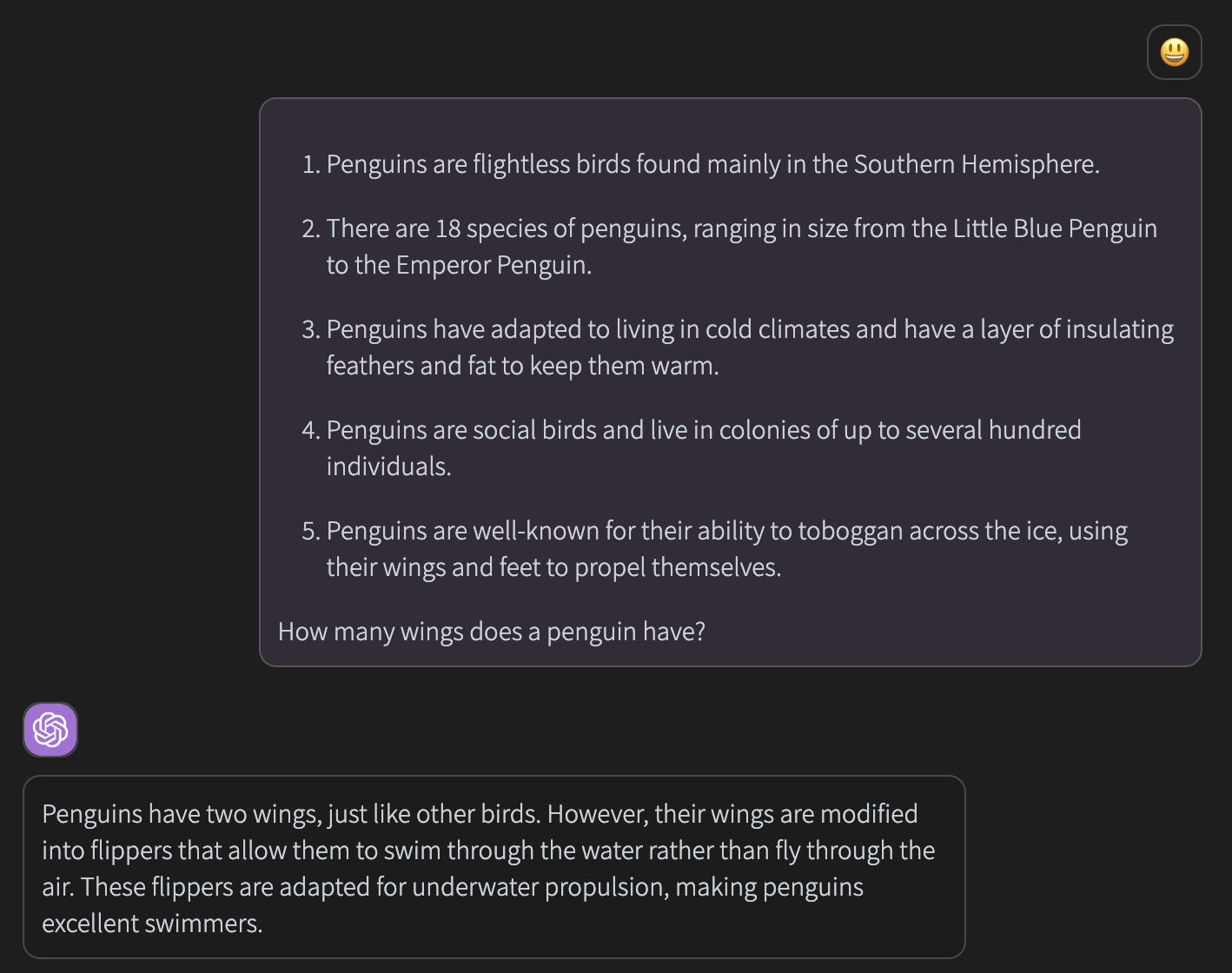

Next, we ask ChatGPT to generate some knowledge about penguins:

Knowledge Injection

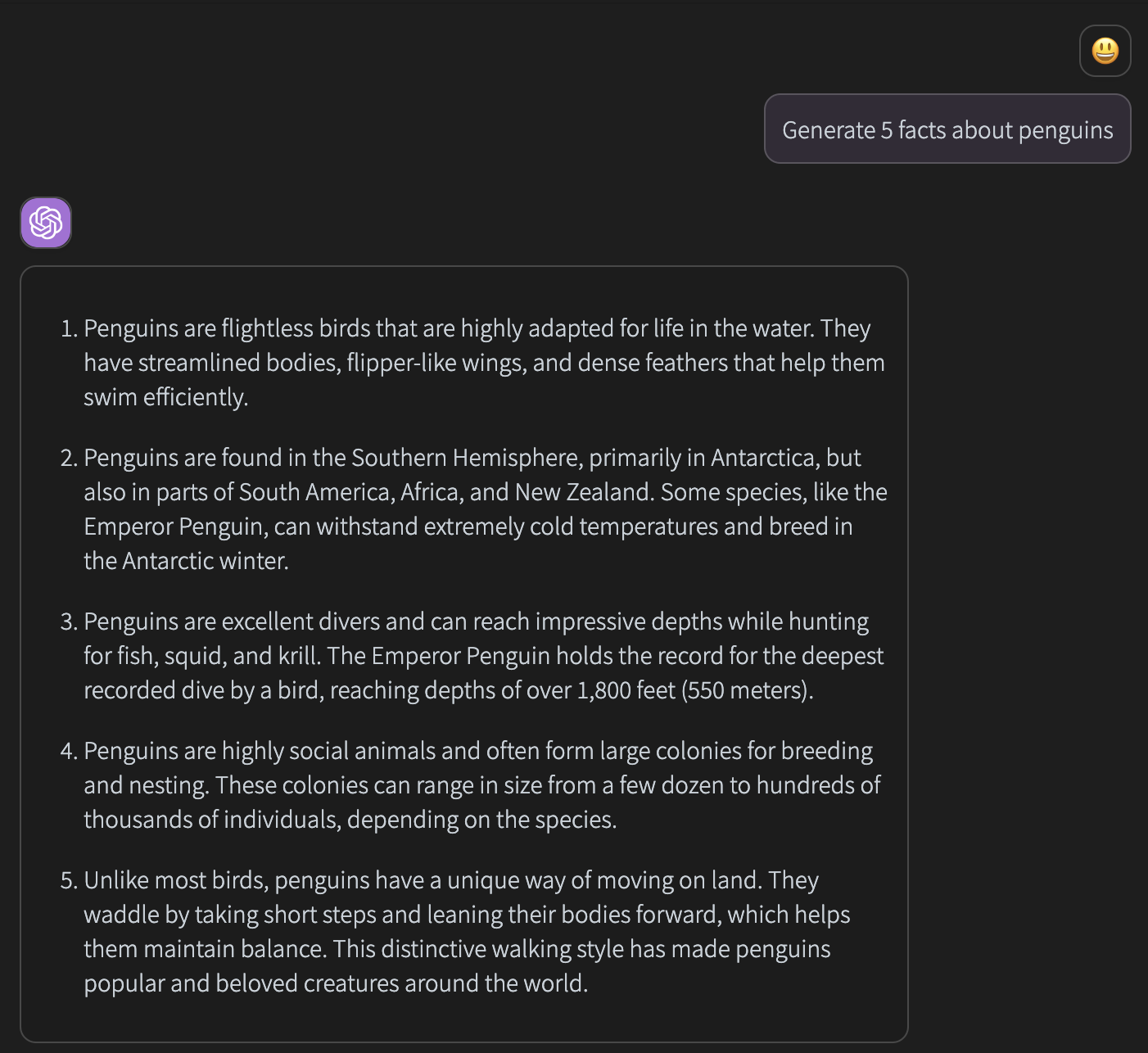

We then ask ChatGPT the same question again, this time injecting the generated knowledge into the prompt:

This time, the penguin finally gets its two wings back. 😂
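In code, the injection step amounts to building a new prompt that places the generated statements ahead of the original question. The sketch below is illustrative only: the `inject_knowledge` helper and its "Knowledge:/Question:/Answer:" labels are hypothetical, and the two statements are example facts rather than a transcript of what ChatGPT produced in the screenshots.

```python
from __future__ import annotations


def inject_knowledge(question: str, knowledge: list[str]) -> str:
    """Build a prompt that places generated knowledge ahead of the question."""
    lines = ["Knowledge:"]
    # One bullet per generated statement.
    lines += [f"- {statement}" for statement in knowledge]
    # Re-ask the original question after the injected knowledge.
    lines += ["", f"Question: {question}", "Answer:"]
    return "\n".join(lines)


prompt = inject_knowledge(
    "Do penguins have wings?",
    ["Penguins are flightless birds.",
     "Penguins' wings have evolved into flippers used for swimming."],
)
print(prompt)
```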