🟢 Why Use ChatGPT API?

Quoting the AI pioneer Andrew Ng: "A system needs much more than a single prompt or a single call to an LLM (large language model)."

Advantages of the API:

- Deeper Integration: With the API, you can integrate ChatGPT into your own systems and workflows, achieving deeper customization and control.

- Personalized Responses: You can adjust the model's responses according to specific needs and scenarios, such as by changing the temperature and maximum tokens to control the creativity and level of detail of the model.

- Batch Processing: If multiple prompts or LLM calls are needed, the API allows for batch processing and parallelization, achieving more efficient operations.

- Security Compliance: Through the API, you can ensure the security of data during transmission and processing, and meet compliance requirements for specific industries or regions.

API

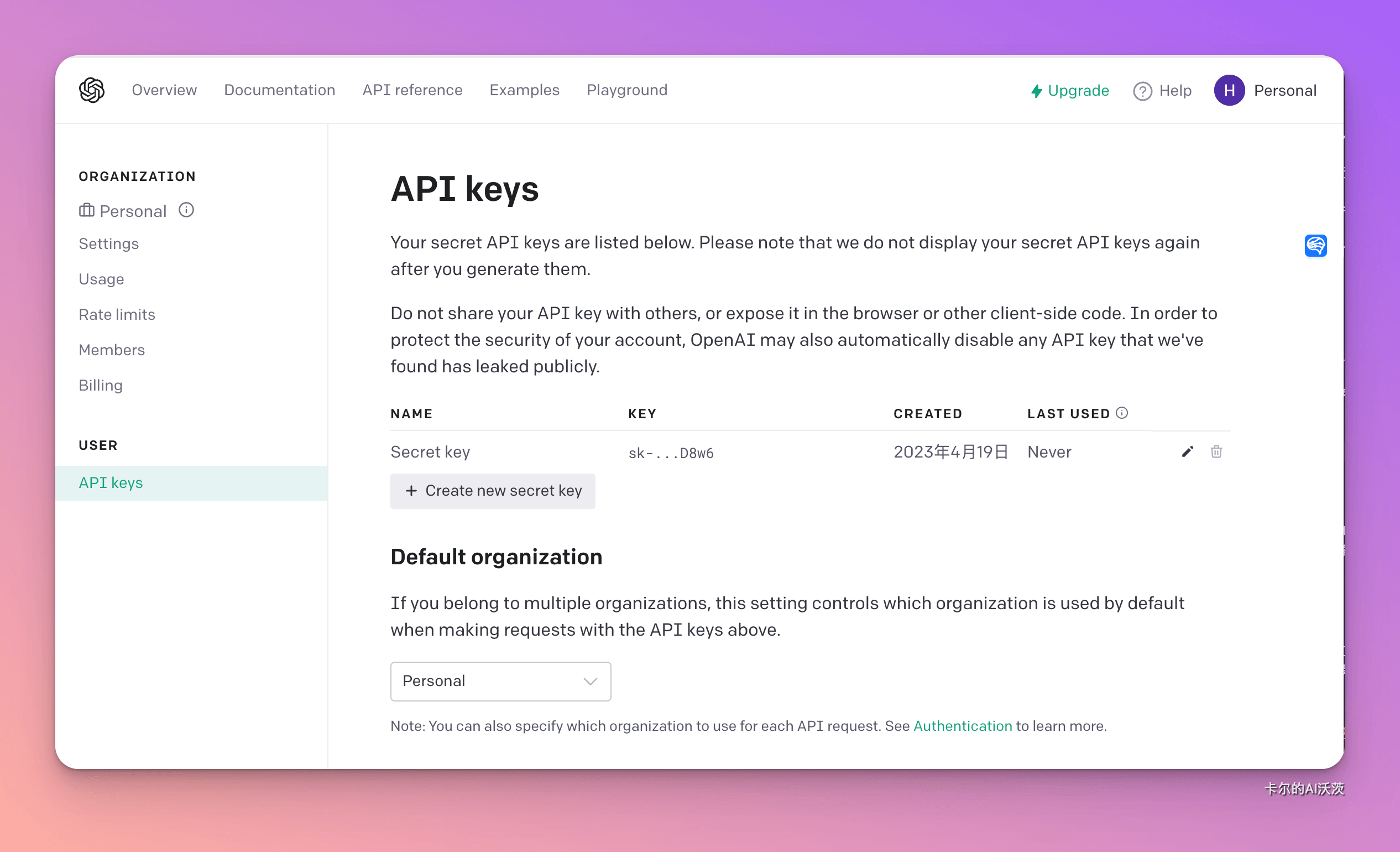

- OpenAI account access API Key application site

- Create API Key, multiple keys can be created. Click "Create new secret key" as shown below, and copy and save it after creation, as it will not be visible after closing the window.

API calls are charged, but OpenAI has provided us with a $5 free usage.

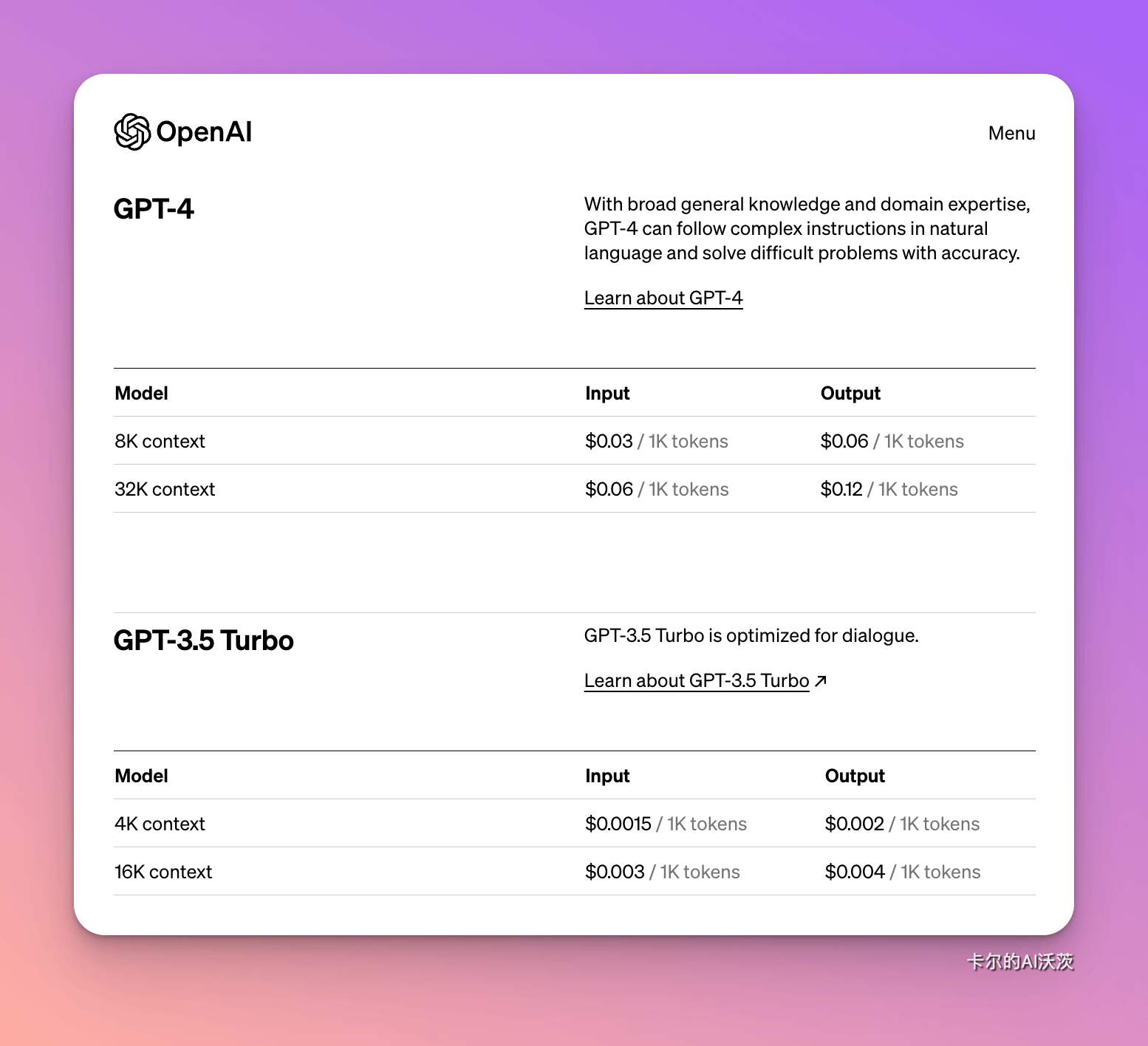

Click link to visit the complete price list.

Rate Limits

OpenAI enforces rate limits on the number of requests you can make to the API. These apply to the number of tokens per minute, the number of requests per minute (or daily requests in some cases), or the number of images per minute in the case of image models.

There are several reasons for implementing rate limits:

- They help prevent abuse or misuse of the API. For example, a malicious actor might flood the API with requests, attempting to overload it or cause service disruptions. By setting rate limits, OpenAI can prevent such activities.

- Rate limits help ensure fair access to the API for everyone. If one person or organization makes too many requests, it could clog the API for others. By limiting the number of requests a single user can make, OpenAI ensures the maximum number of people can use the API without experiencing slowdowns.

Below are some rate limits. For a detailed list, click link to visit.

To lift rate limits, you can fill out a usage evidence form, collect 10 business days of usage data at the current rate limits, and then submit a formal rate limit increase request for OpenAI's review and approval.

Excellent Software Supporting API

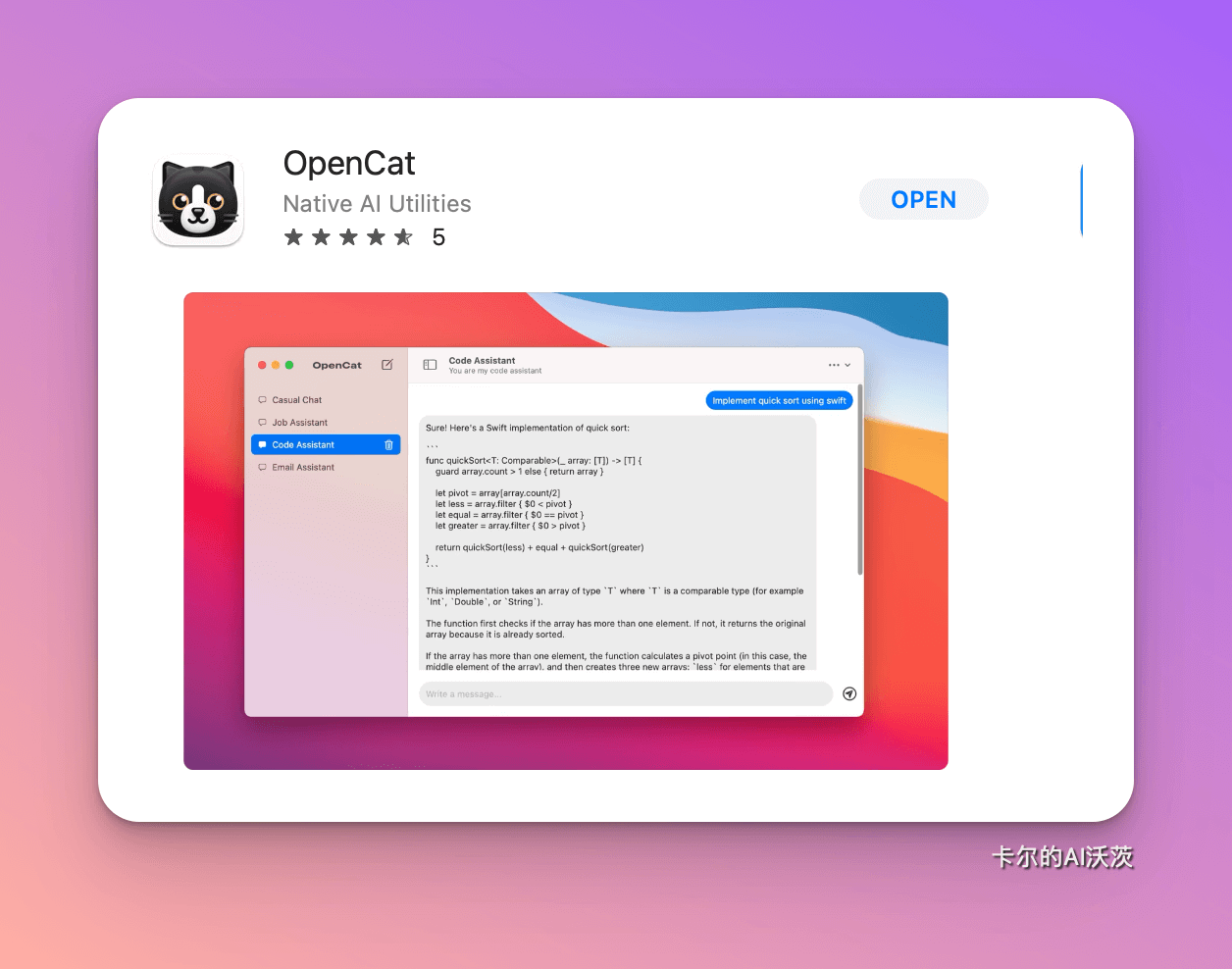

OpenCat: A local desktop ChatGPT client that supports iOS and macOS. Compared to ChatGPT's $20 monthly subscription fee, the API cost for light use is lower.

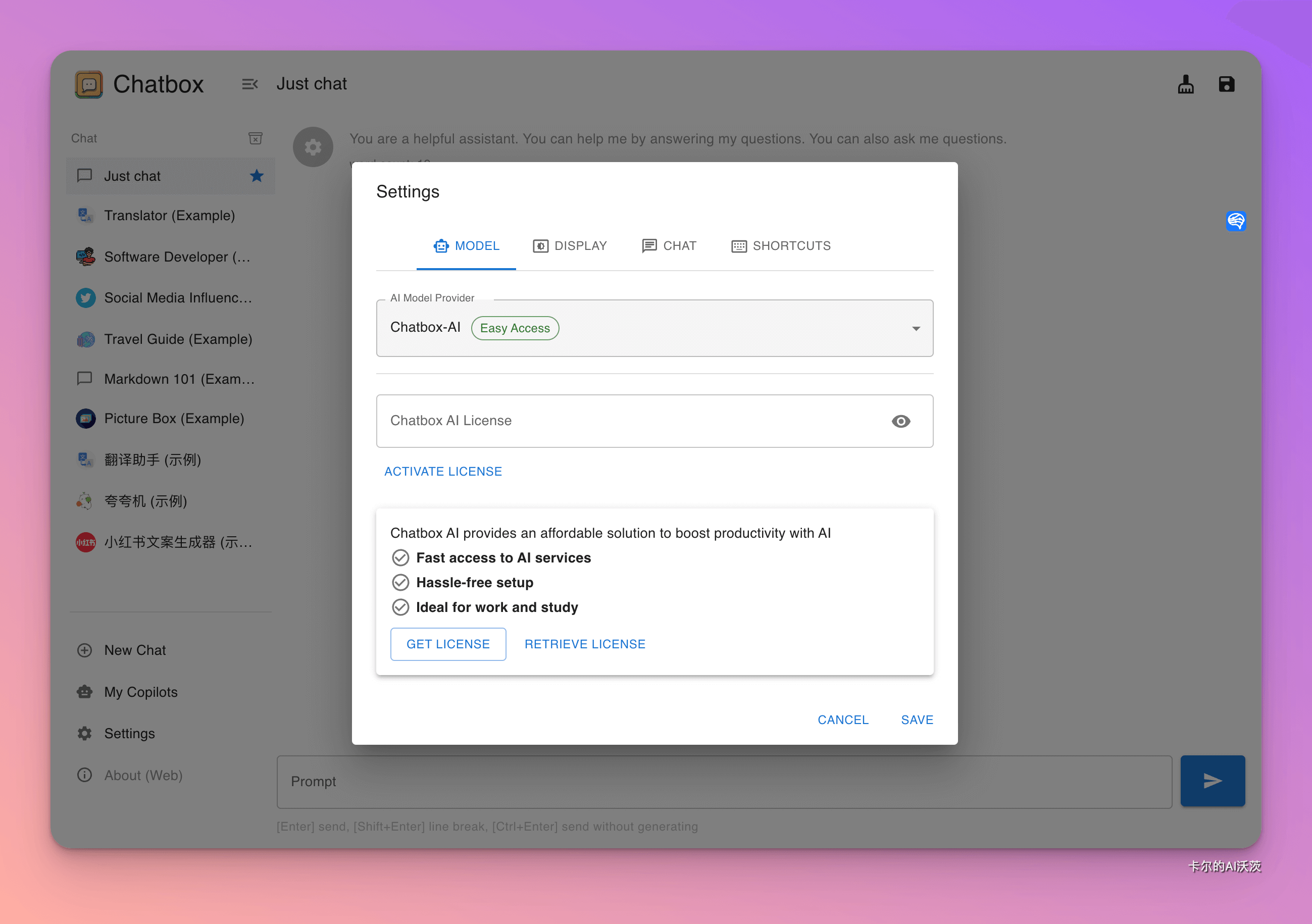

ChatBox: An open-source, free OpenAI API desktop client that supports Windows, macOS, and Linux. Users can customize the KEY and API Host address, save chat records locally, manage multiple sessions, and set different Prompts.

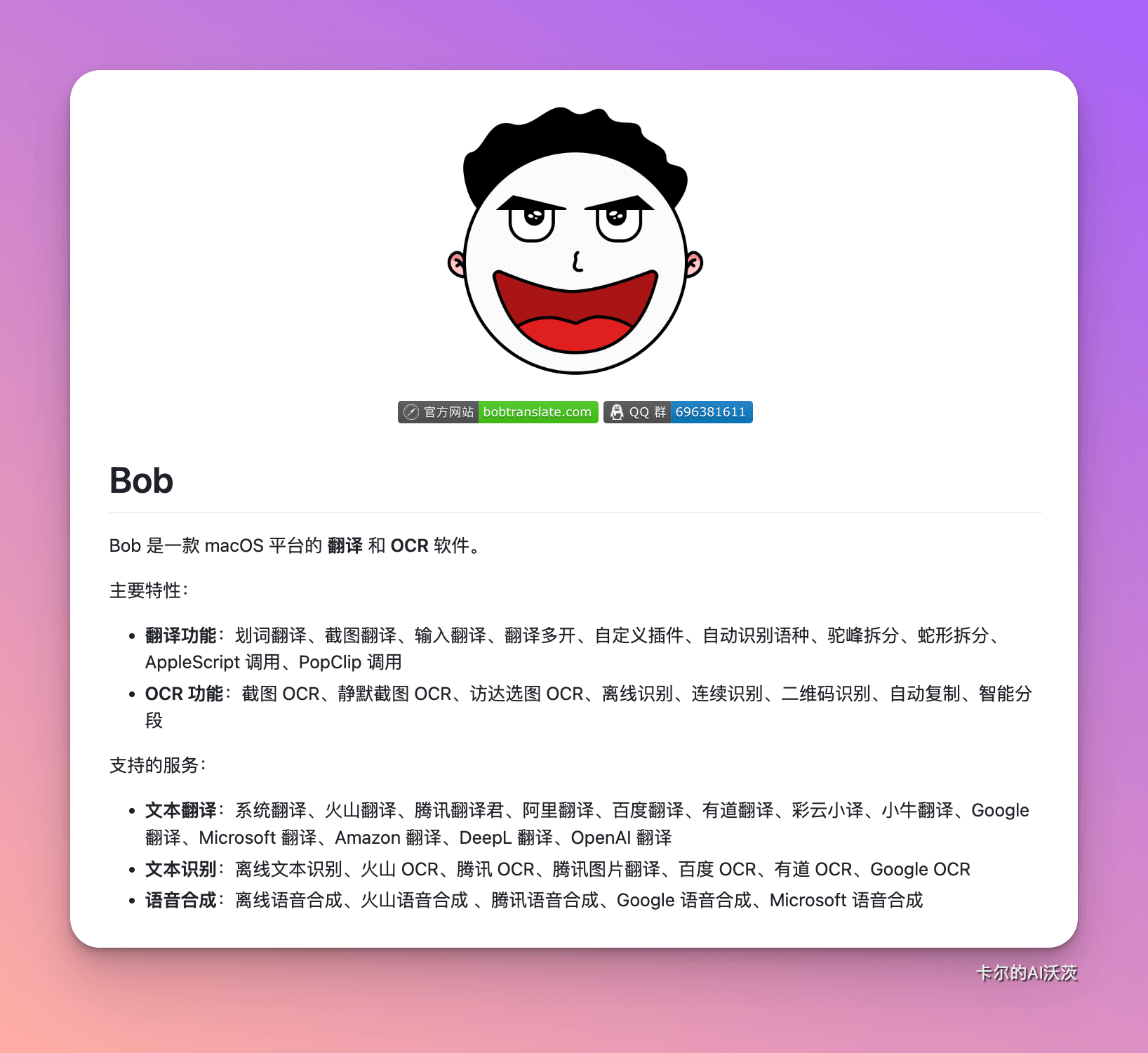

Bob: An excellent translation software for macOS. After the gpt-3.5-turbo update, the translation speed has significantly improved.

PopClip: A "text selection operation enhancement tool" on macOS. After integrating the API key, selecting text will pop up an AI button, achieving "one-click" to get ChatGPT's help and answers.

bilingual_book_maker: Uses the OpenAI API to translate e-books, costing about $3 per book.

xiaogpt: Integrates ChatGPT into Xiao Ai, adding fun.

For more tools and applications based on the ChatGPT API, visit Awesome ChatGPT API.

About Tokens

In large language models, "token" plays a critical role. It is the smallest unit in text, which can be a word, punctuation mark, number, or symbol. Let's dive into the importance of tokens in natural language processing:

- Tokenization

- Definition: Tokenization is the process of breaking down sentences or text into tokens.

- Application: The model receives a string of tokens as input and attempts to predict the most likely next token. Tokens can be converted into vector representations through embedding operations for processing in neural networks.

- Token Count Limit

- Key Limit: The number of tokens is a key limitation of large language models like ChatGPT.

- Personalized Interaction: A larger context length allows the model to query the user's context and data, achieving more personalized interaction.

- Accuracy and Fluency: A large context window makes the model more accurate, fluent, and enhances the model's creativity.

- Token Limits for Different Models

- GPT Models: According to official documentation, different GPT models have different token limits. For example, 32K is the token limit for the model.

- Claude-2-100k Model: The Claude-2-100k model, which competes with ChatGPT, has a context limit of 100k, allowing you to input several books at once!

Why Understand Token Limits

Understanding token limits is important when using large language models because it affects two key aspects:

- Input Limit for Current Conversation:

- The content you input at one time cannot exceed the model's token limit. This means you must express your needs accurately and concisely within the given token range.

- Overall Context Length Limit:

- In a complete conversation, your input plus the model's output cannot exceed the model's token limit. If it exceeds the limit, the earliest part of the conversation will be forgotten, which may cause the initial prompt you set to fail.

If the prompt itself is too long, consider setting it in English and asking the model to respond in Chinese. This can save tokens, leaving more room for dialogue.